Ad Creative Testing Guide 2026

Master ad creative testing in 2026. Learn systematic A/B testing frameworks, multivariate approaches, and how to use AI tools to optimize ad performance.

Key Takeaways

- 1**Test systematically, not randomly** — Random creative testing produces random insights

- 2**Isolate variables** — Change one thing at a time to know what actually worked

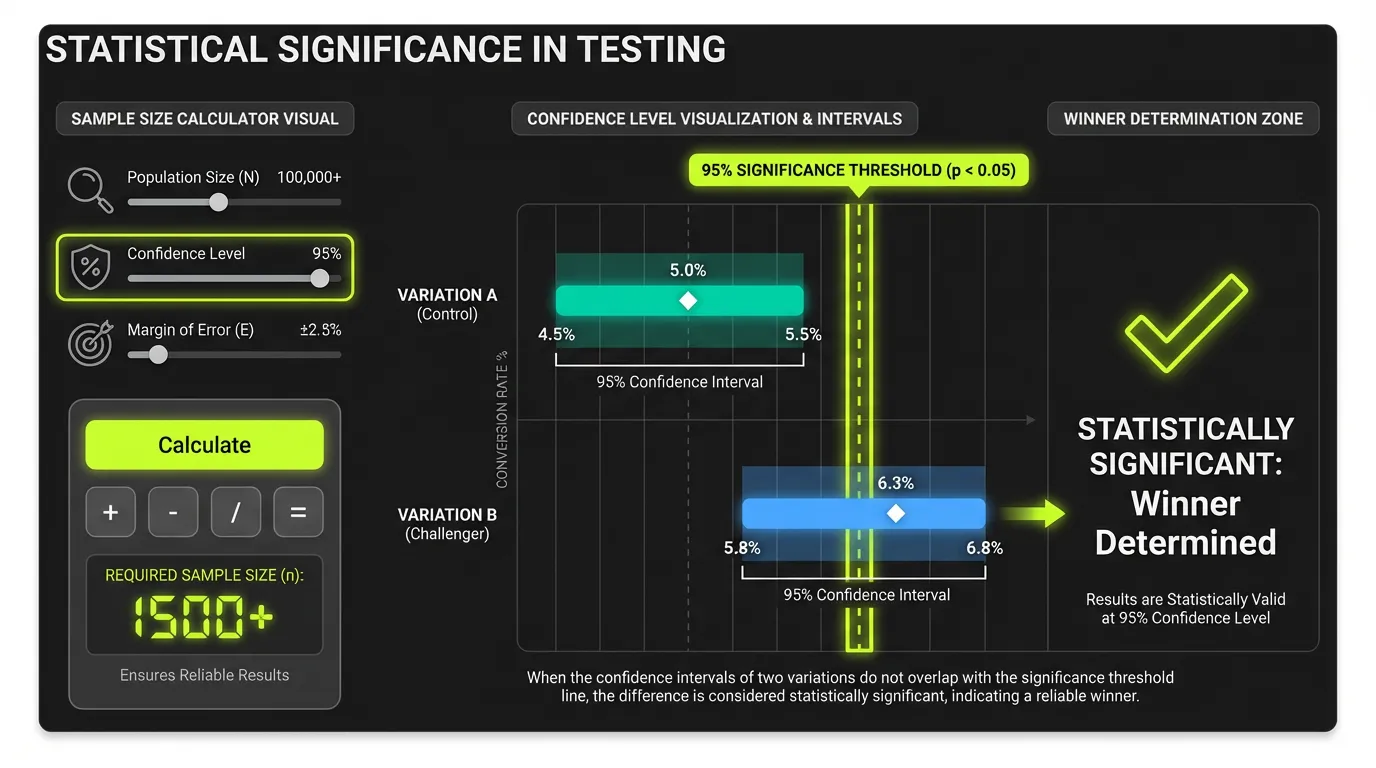

- 3**Statistical significance matters** — Stop tests when you have enough data, not when you see a trend

- 4**Creative fatigue is real** — Today's winner is tomorrow's underperformer

Key Takeaways

- Test systematically, not randomly — Random creative testing produces random insights

- Isolate variables — Change one thing at a time to know what actually worked

- Statistical significance matters — Stop tests when you have enough data, not when you see a trend

- Creative fatigue is real — Today's winner is tomorrow's underperformer

- AI accelerates, not replaces — Use AI for generation and analysis, humans for strategy

Why Creative Testing Matters in 2026

The advertising landscape has shifted creative from nice-to-have to must-have.

The Privacy-Creative Connection

| What Changed | Creative Impact |
|---|---|
| Third-party cookie restrictions | Can't rely on precise behavioral targeting |
| Signal loss (Apple ATT, etc.) | Algorithms lean more heavily on creative signals |
| Audience saturation | You keep reaching the same people, so the message must change |
| Rising CPMs | Creative efficiency is the main remaining cost lever |

Creative Performance Distribution

Performance vs Average (same audience, bid, budget)

Top 10% creative: ████████████████░░░░ 3.2x

Top 25% creative: ████████████░░░░░░░░ 1.8x

Average creative: ██████░░░░░░░░░░░░░░ 1.0x

Bottom 25% creative: ███░░░░░░░░░░░░░░░░░ 0.5x

Bottom 10% creative: █░░░░░░░░░░░░░░░░░░░ 0.2x

A 3.6x gap between the top and bottom quartiles (1.8x vs 0.5x), and 16x between the top and bottom 10% (3.2x vs 0.2x)

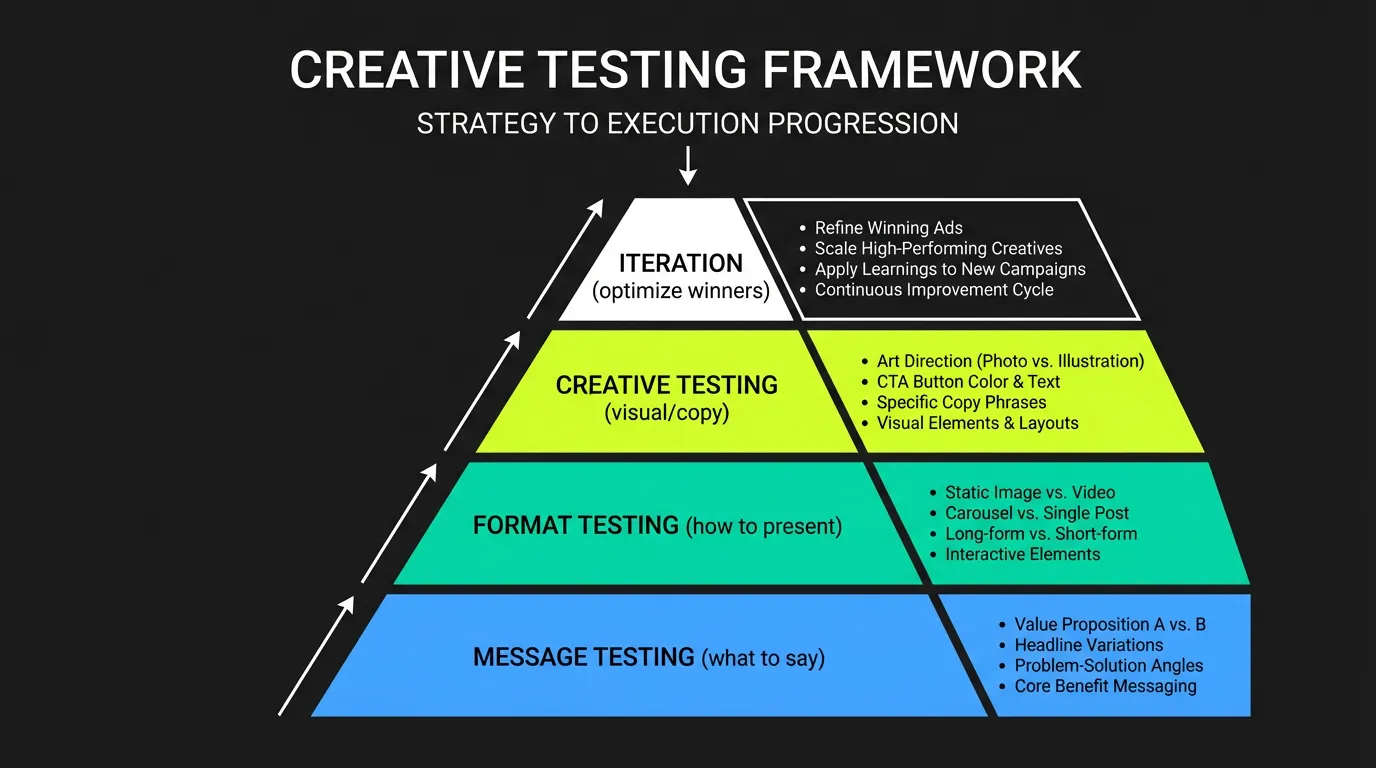

The Creative Testing Framework

Systematic testing produces actionable insights.

Step 1: Define Testing Priorities

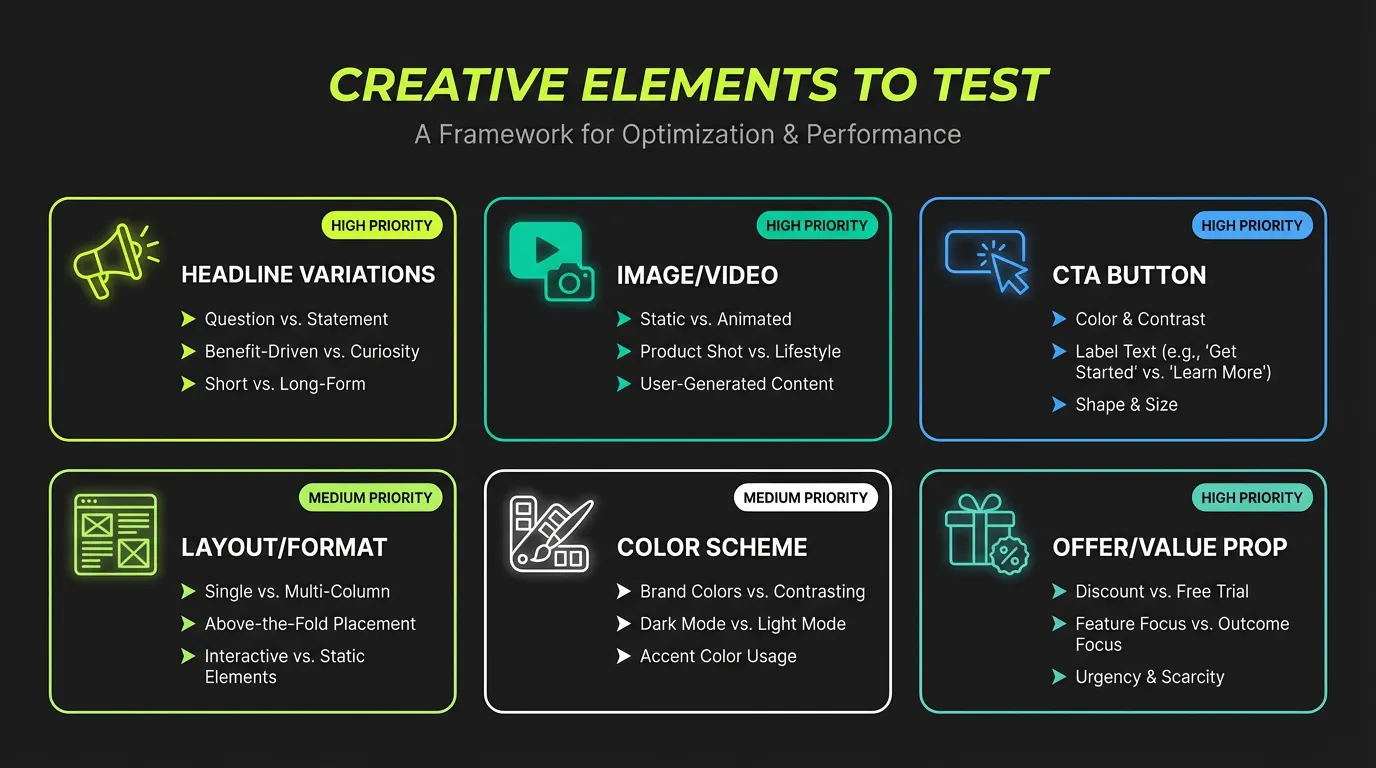

Not all creative elements have equal impact:

| Element | Typical Impact | Test Priority |
|---|---|---|
| Hook (first 3 seconds) | Very High | 1st |
| Main message/offer | Very High | 1st |
| Visual style | High | 2nd |
| Call-to-action | High | 2nd |
| Copy length | Medium | 3rd |
| Color scheme | Medium | 3rd |
| Text placement | Low | 4th |
| Minor design tweaks | Low | 4th |

Step 2: Isolate Variables

Wrong approach: Test multiple changes at once

Version A: Blue background, "Save 20%", testimonial, Shop Now CTA

Version B: Red background, "Free shipping", product demo, Buy Now CTA

Result: B wins

Learning: ??? (don't know what caused the win)

Right approach: Test one variable at a time

Test 1: Background color

├── A: Blue background + standard elements

└── B: Red background + standard elements

Result: Blue wins

Test 2: Offer messaging (on winning background)

├── A: Blue + "Save 20%" + standard elements

└── B: Blue + "Free shipping" + standard elements

Result: "Free shipping" wins

Learning: Clear insights for each element
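To make the one-change rule something you can check rather than rely on discipline, here is a minimal Python sketch. It models each creative as a dictionary of elements (a hypothetical schema, not any ad platform's format), builds challengers that change exactly one element, and reports which elements differ between two versions:

```python
from typing import Dict, List

# A creative is modeled as element name -> value (hypothetical schema for illustration).
Creative = Dict[str, str]


def single_variable_challengers(control: Creative, element: str, options: List[str]) -> List[Creative]:
    """Return challengers that differ from the control in exactly one element."""
    if element not in control:
        raise KeyError(f"unknown element: {element}")
    challengers = []
    for option in options:
        if option == control[element]:
            continue  # identical to the control, so not a real challenger
        variant = dict(control)  # copy so the control itself is never mutated
        variant[element] = option
        challengers.append(variant)
    return challengers


def changed_elements(a: Creative, b: Creative) -> List[str]:
    """Names of the elements whose values differ between two creatives."""
    return sorted(key for key in a if a[key] != b.get(key))


# The two versions from the wrong-approach example above.
version_a = {"background": "blue", "offer": "Save 20%", "proof": "testimonial", "cta": "Shop Now"}
version_b = {"background": "red", "offer": "Free shipping", "proof": "product demo", "cta": "Buy Now"}
print(changed_elements(version_a, version_b))  # -> ['background', 'cta', 'offer', 'proof']

# An offer test on the control: only one element changes.
challenger = single_variable_challengers(version_a, "offer", ["Free shipping"])[0]
print(changed_elements(version_a, challenger))  # -> ['offer']
```

Running `changed_elements` on a pair before launch is a quick guard: any result longer than one item means the test can't tell you what caused the win.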

Step 3: Determine Sample Size

Statistical significance requires sufficient data. Common rule-of-thumb floors:

| Metric | Rule-of-Thumb Minimum |
|---|---|
| Impressions | 10,000+ per variant |
| Clicks | 100+ per variant |
| Conversions | 30+ per variant |
| Value | Depends on variance |
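These floors are rough: the sample you actually need depends on the baseline rate and on the smallest lift worth detecting. A common way to estimate it is the standard sample-size formula for a two-sided, two-proportion z-test. The sketch below assumes 95% confidence and 80% power as defaults; both are conventions you can change, not platform settings:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-variant sample size for a two-sided, two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate the challenger would need to reach
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Impressions per variant to detect a 1.0% -> 1.2% CTR change (a 20% relative lift).
print(sample_size_per_variant(0.01, 0.20))
```

For a 1% baseline CTR and a 20% relative lift, this comes out a little over 42,000 impressions per variant, several times the 10,000 floor above. Smaller expected lifts push the number up quickly, because it scales with the inverse square of the difference.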

Step 4: Set Test Duration

Balance speed with validity:

Minimum: 7 days (captures day-of-week variation)

Typical: 14-21 days

Complex tests: 30+ days

Factors affecting duration:

- Traffic volume

- Conversion rate

- Number of variants

- Confidence level needed
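Once you know the sample per variant, duration is mostly arithmetic. This sketch assumes traffic is split evenly across variants and, as a design choice, rounds up to whole weeks so every weekday is represented equally, in line with the 7-day minimum above:

```python
import math


def estimate_duration_days(sample_per_variant: int, variants: int,
                           daily_traffic: int, min_days: int = 7) -> int:
    """Days to collect the sample, never below min_days, rounded up to whole weeks."""
    raw_days = math.ceil(sample_per_variant * variants / daily_traffic)
    days = max(raw_days, min_days)
    return math.ceil(days / 7) * 7  # full weeks keep the day-of-week mix balanced


# About 42,700 impressions per variant, two variants, 8,000 eligible impressions per day.
print(estimate_duration_days(42_700, 2, 8_000))  # -> 14
# Same traffic, four variants.
print(estimate_duration_days(42_700, 4, 8_000))  # -> 28
```

The second call shows why the number of variants is on the list of duration factors: at the same traffic, four variants take twice as long as two.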

Types of Creative Tests

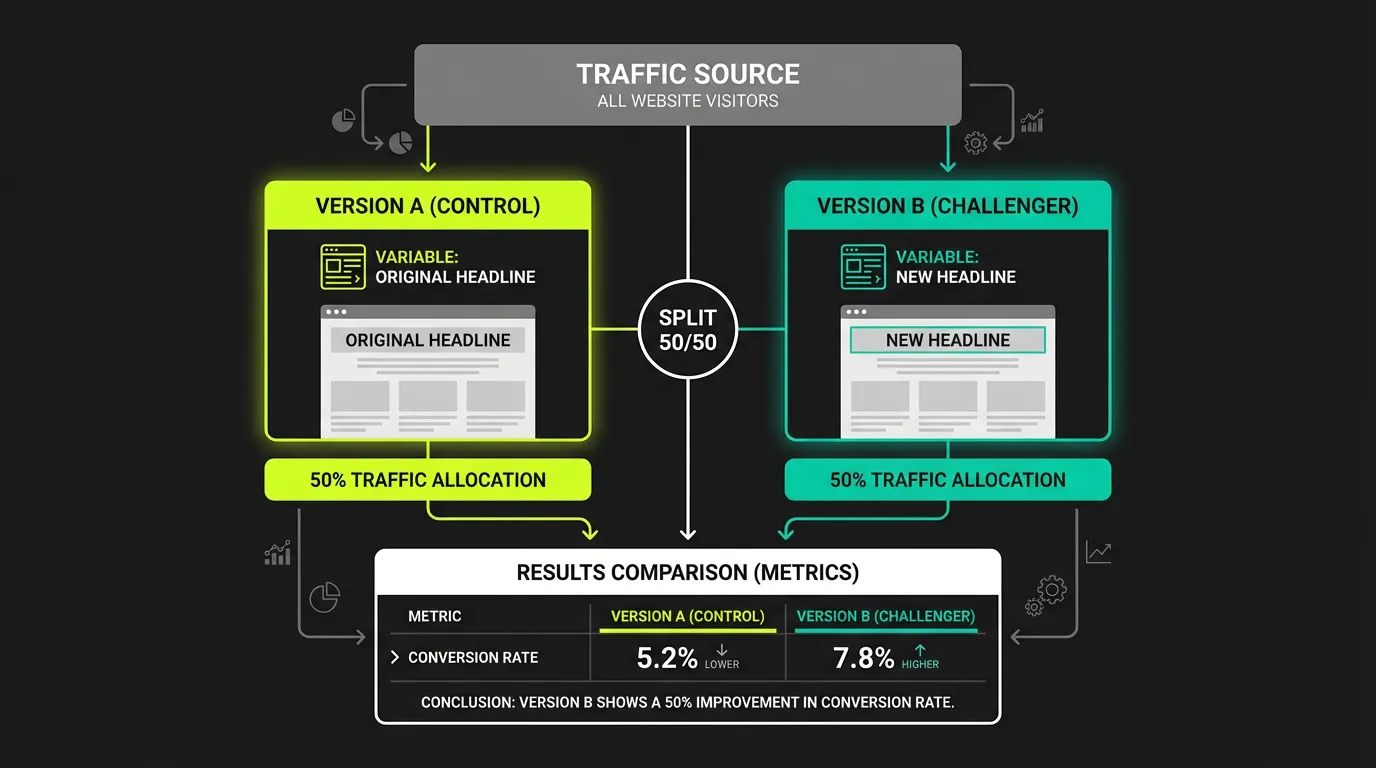

A/B Testing

Two variants, one variable difference.

Best for:

- Headline testing

- CTA testing

- Offer comparison

- Single element optimization

Test Setup:

├── Control (A): Current best performer

├── Challenger (B): One variable changed

├── Split: 50/50 traffic

└── Duration: Until the pre-planned sample size is reached
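Stopping the moment a test first reaches significance inflates false positives, because every extra look gives noise another chance to cross the threshold. A small A/A simulation makes this concrete: both variants share the same true conversion rate, so any "winner" is noise. The numbers are assumptions for illustration (14 daily looks, 400 impressions per variant per day, a 5% true rate, a pooled z-test at alpha = 0.05):

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions yet: no evidence either way
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_tests=200, days=14, daily_n=400, rate=0.05, alpha=0.05, seed=7):
    """Share of A/A tests flagged 'significant' with daily peeking vs one final check."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        flagged = False
        for _ in range(days):
            # Both variants have the same true rate, so any difference is noise.
            conv_a += sum(rng.random() < rate for _ in range(daily_n))
            conv_b += sum(rng.random() < rate for _ in range(daily_n))
            n += daily_n
            if p_value(conv_a, n, conv_b, n) < alpha:
                flagged = True  # a daily check would have declared a winner here
        peeking_hits += flagged
        final_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / n_tests, final_hits / n_tests


peeking_rate, final_rate = simulate_aa_tests()
print(f"false winners with daily peeking: {peeking_rate:.0%}, with one final check: {final_rate:.0%}")
```

Every flagged test here is a false winner. The single end-of-test check should land near the nominal 5%, while stopping at the first significant daily check flags noticeably more. Fixing the sample size up front, or using a group-sequential design with adjusted thresholds, avoids this.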

Analysis:

- Winner: Statistically better performer

- Learnings: Why it won (hypothesis validation)
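"Statistically better" needs a concrete test. For CTR or CVR, a common choice is the pooled two-proportion z-test; the article doesn't name a specific test, so treat this as one reasonable option rather than the method. The click counts in the example are hypothetical:

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test: returns (relative lift of B over A, z, two-sided p)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, z, p_value


# Control: 120 clicks on 10,000 impressions; challenger: 150 clicks on 10,000.
lift, z, p = two_proportion_z_test(120, 10_000, 150, 10_000)
print(f"lift={lift:+.0%}, z={z:.2f}, p={p:.3f}")
```

Here the challenger's CTR is 25% higher, yet the p-value is about 0.07, above the conventional 0.05 cutoff, so by that standard there is no winner yet.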

Multivariate Testing (MVT)

Test multiple elements simultaneously.

Best for:

- Finding optimal combinations

- Understanding element interactions

- Mature testing programs
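The main cost of MVT is combinatorial: every combination of options is its own cell that needs traffic. This sketch enumerates a full-factorial design with `itertools.product`; the element names and options are hypothetical:

```python
from itertools import product
from typing import Dict, List


def full_factorial(elements: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Every combination of element options: one MVT cell per combination."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*(elements[n] for n in names))]


# Hypothetical options for three elements.
elements = {
    "hook": ["question", "bold claim", "product demo"],
    "visual": ["lifestyle", "close-up", "UGC"],
    "cta": ["Shop Now", "Learn More"],
}
cells = full_factorial(elements)
print(len(cells))  # 3 x 3 x 2 = 18

# Traffic needed if every cell must reach 10,000 impressions.
print(len(cells) * 10_000)  # 180000
```

Eighteen cells at a 10,000-impression floor means 180,000 impressions before any cell is readable, which is why MVT tends to fit mature, high-traffic programs.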

Sequential Testing

Test in stages, building on winners.

Stage 1: Test 3 concepts

Winner: Concept B

Stage 2: Test 3 hooks (using Concept B)

Winner: Hook 2

Stage 3: Test 3 CTAs (using Concept B + Hook 2)

Winner: CTA 3

Final: Optimized creative combining all winners

Holdout Testing

Measure incremental lift of new creative.

Test Group: Sees the new creative

Control Group: Sees no ads (or the old creative)

Measure: Conversion difference between groups

Result: True incremental impact versus whatever the control saw (no ads, or the old creative)
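The holdout arithmetic itself is short. This sketch assumes users were randomly assigned to the test and control groups and returns point estimates only; the group sizes and conversion counts are made up for illustration, and you would still check significance before acting on the result:

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    users: int
    conversions: int

    @property
    def cvr(self) -> float:
        return self.conversions / self.users


def holdout_lift(test: GroupResult, control: GroupResult) -> dict:
    """Point estimates of incrementality from a randomized test/control split."""
    expected = control.cvr * test.users  # conversions the test group would have had anyway
    return {
        "relative_lift": (test.cvr - control.cvr) / control.cvr,
        "incremental_conversions": test.conversions - expected,
    }


result = holdout_lift(GroupResult(users=50_000, conversions=1_500),
                      GroupResult(users=50_000, conversions=1_200))
print(result)  # roughly a 25% lift and 300 incremental conversions
```

With these numbers the test group converts at 3.0% against 2.4% for the control, a 25% relative lift, or about 300 conversions that would not have happened otherwise.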

Platform-Specific Testing

Meta Ads Testing

Dynamic Creative:

- Upload multiple headlines, images, descriptions

- Algorithm tests combinations

- Reports winning elements

- Breakdown by creative element

- Asset-level performance

- Creative fatigue indicators

Google Ads Testing

Responsive Search Ads:

- Up to 15 headlines and 4 descriptions

- Automatic combination testing

- Asset performance labels (Best, Good, Low)

Responsive Display Ads:

- Multiple images, headlines, descriptions

- Auto-generated combinations

- Performance by asset

Video experiments (YouTube):

- A/B test different videos

- Brand lift measurement

TikTok Testing

Split Test:

- Upload multiple videos

- AI generates variations

- Automatic optimization

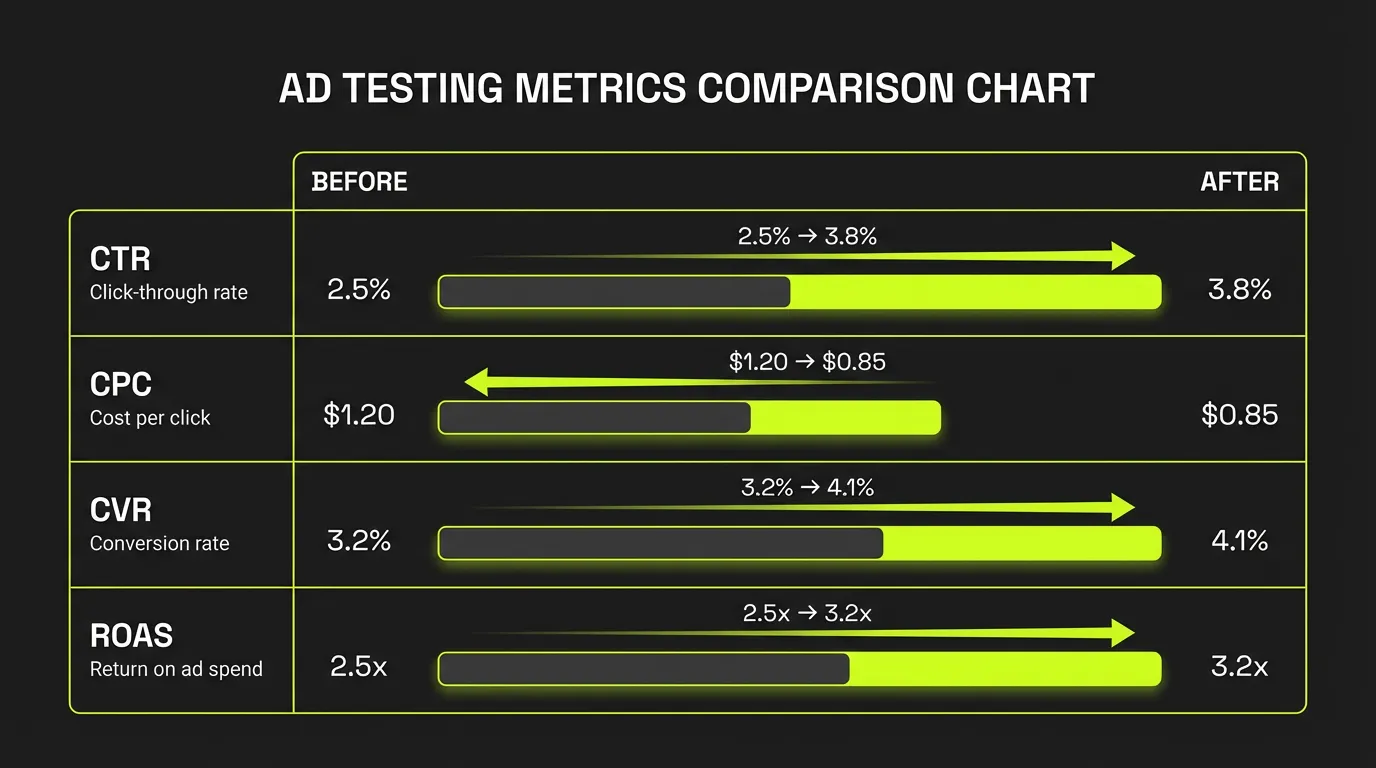

Creative Testing Metrics

Primary Metrics

| Metric | What It Tells You | Watch For |
|---|---|---|
| CTR | Ad attractiveness | > 1% for display, > 3% for social |
| VTR | Video engagement | > 25% for short-form |
| Hook rate | First 3 sec hold | > 50% for video |
| CPC | Click efficiency | Lower is better |
| CVR | Conversion effectiveness | Compare vs benchmark |
| CPA/ROAS | Business outcome | Ultimate measure |
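Most of these metrics are simple ratios of raw counts. Definitions vary by platform, so this sketch states its assumptions: hook rate is taken as 3-second video views divided by impressions, and CVR as conversions per click. VTR is left out because platforms define a "view" differently. The field names and numbers are hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CreativeStats:
    impressions: int
    three_second_views: int
    clicks: int
    conversions: int
    spend: float
    revenue: float


def ratio(numerator: float, denominator: float) -> Optional[float]:
    """Safe division: None instead of an error when there is no data yet."""
    return numerator / denominator if denominator else None


def creative_metrics(s: CreativeStats) -> Dict[str, Optional[float]]:
    return {
        "ctr": ratio(s.clicks, s.impressions),
        "hook_rate": ratio(s.three_second_views, s.impressions),  # assumed definition
        "cpc": ratio(s.spend, s.clicks),
        "cvr": ratio(s.conversions, s.clicks),  # click-based CVR
        "cpa": ratio(s.spend, s.conversions),
        "roas": ratio(s.revenue, s.spend),
    }


stats = CreativeStats(impressions=20_000, three_second_views=9_000, clicks=400,
                      conversions=12, spend=600.0, revenue=1_800.0)
print(creative_metrics(stats))
```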

Creative Health Metrics

| Metric | Fatigue Signal |
|---|---|
| CTR trend | Declining week over week |
| Frequency vs CTR | CTR drops as frequency rises |
| Comment sentiment | More negative reactions |
| Share rate | Declining over time |

AI-Powered Creative Testing

AI Creative Generation

Use AI tools to create test variants:

Image generation:

- Midjourney, DALL-E for concept imagery

- Canva AI, Adobe Firefly for design variations

- Product photography generation

Copy generation:

- GPT-4 for headline variants

- Claude for long-form copy

- Platform AI writers (Meta, Google)

Video generation:

- Runway, Pika for video creation

- Synthesia for spokesperson videos

- Auto-caption and editing tools

AI Performance Prediction

Some tools predict creative performance before launch:

Caveats:

- Predictions aren't perfect

- Novel concepts may score low but perform well

- Still need actual testing for validation

AI-Driven Optimization

Platform AI handles micro-optimization:

Human Role:

├── Define strategy and brand guidelines

├── Create diverse creative concepts

├── Set business goals and constraints

└── Analyze learnings and iterate

AI Role:

├── Test combinations at scale

├── Optimize delivery by audience/placement

├── Identify winning elements

└── Predict fatigue and recommend refresh
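Ad platforms don't publish the exact algorithms behind their creative optimization, so the following is only an illustration of the general idea of shifting delivery toward winners while still exploring. It uses Thompson sampling, a standard multi-armed bandit technique, on simulated creatives whose true CTRs are hypothetical:

```python
import random
from typing import List


def thompson_allocation(true_ctrs: List[float], rounds: int = 20_000, seed: int = 42) -> List[int]:
    """Simulate Thompson sampling over creatives; return impressions served per creative."""
    rng = random.Random(seed)
    clicks = [0] * len(true_ctrs)
    shown = [0] * len(true_ctrs)
    for _ in range(rounds):
        # Draw a plausible CTR for each creative from its Beta(1 + clicks, 1 + non-clicks) posterior.
        draws = [rng.betavariate(1 + c, 1 + n - c) for c, n in zip(clicks, shown)]
        arm = max(range(len(draws)), key=draws.__getitem__)  # serve the highest draw
        shown[arm] += 1
        if rng.random() < true_ctrs[arm]:  # simulated click
            clicks[arm] += 1
    return shown


# Hypothetical true CTRs; the algorithm only ever sees the simulated clicks.
print(thompson_allocation([0.02, 0.03, 0.05]))
```

Most impressions end up on the 5% creative, while the weaker ones still get some traffic early on.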

Creative Testing Best Practices

1. Test Concepts, Not Executions

Wrong: Testing small variations of the same idea

Test A: "Save 20% today"

Test B: "20% off today"

Test C: "Today: 20% savings"

Right: Testing genuinely different concepts

Test A: Price-focused ("Save 20% today")

Test B: Benefit-focused ("Sleep better tonight")

Test C: Social proof ("Join 10,000 happy customers")

2. Maintain Creative Volume

Ideal creative volume by spend:

| Monthly Spend | Active Creatives |
|---|---|
| < $10K | 5-10 |
| $10K-50K | 10-20 |
| $50K-200K | 20-40 |
| $200K+ | 40+ |

3. Document Everything

Create a testing log:

| Test | Hypothesis | Winner | Lift | Learning |
|---|---|---|---|---|
| Hook test | Fast hook > slow | Fast | +34% CTR | First 2 seconds critical |
| Offer test | Free shipping > discount | Free shipping | +22% CVR | Our audience values convenience |
| Format test | Carousel > static | Static | +18% CTR | Simple beats complex here |
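If the log lives in code or a spreadsheet export rather than a doc, storing the raw control and winner values and deriving lift from them keeps the numbers consistent. A minimal sketch using a dataclass and the standard `csv` module; the record fields and raw CTR values are hypothetical, chosen so the output reproduces the first row of the table above:

```python
import csv
import io
from dataclasses import dataclass
from typing import List


@dataclass
class CreativeTestRecord:
    name: str
    hypothesis: str
    metric: str
    control_value: float
    winner_value: float
    winner: str
    learning: str

    @property
    def lift(self) -> float:
        """Relative lift of the winner over the control on the chosen metric."""
        return (self.winner_value - self.control_value) / self.control_value


def log_to_csv(records: List[CreativeTestRecord]) -> str:
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Test", "Hypothesis", "Winner", "Lift", "Learning"])
    for r in records:
        writer.writerow([r.name, r.hypothesis, r.winner, f"{r.lift:+.0%} {r.metric}", r.learning])
    return buffer.getvalue()


# Hypothetical raw CTRs for the hook test (1.00% control, 1.34% winner).
hook_test = CreativeTestRecord("Hook test", "Fast hook > slow", "CTR", 0.0100, 0.0134,
                               "Fast", "First 2 seconds critical")
print(log_to_csv([hook_test]))
```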

4. Refresh on Schedule

Don't wait for fatigue—plan creative refresh:

| Creative Type | Refresh Cycle |
|---|---|
| Static image | Every 2-4 weeks |
| Carousel | Every 4-6 weeks |
| Video (short) | Every 4-6 weeks |
| Video (long) | Every 6-8 weeks |
| UGC | Every 2-3 weeks |

Advanced Testing Strategies

Cross-Platform Testing

Same creative performs differently across platforms:

Creative A Performance:

├── Meta: CTR 1.8%, CVR 3.2%

├── Google Display: CTR 0.6%, CVR 1.1%

├── TikTok: CTR 2.4%, CVR 2.8%

└── YouTube: VTR 42%, CVR 1.9%

Learning: Need platform-specific optimization

Audience × Creative Testing

Test creative by audience segment:

| Creative | New Visitors | Retargeting | Lookalikes |
|---|---|---|---|
| Educational | ★★★ | ★ | ★★ |
| Social proof | ★★ | ★★★ | ★★★ |
| Offer-focused | ★ | ★★★ | ★★ |
| Brand | ★★★ | ★ | ★★★ |

Different audiences respond to different messages.

Funnel Stage Testing

Creative needs change by funnel position:

| Stage | Creative Focus | Test Elements |
|---|---|---|
| Awareness | Hook, brand | Attention-grabbers |
| Consideration | Benefits, features | Educational content |
| Decision | Offers, urgency | CTAs, pricing |
| Loyalty | Value, appreciation | Exclusive offers |

Common Testing Mistakes

1. Declaring Winners Too Early

"We ran the test for 3 days and B is winning by 20%!"

Reality: Early results are noisy. Decide the sample size in advance and wait until you reach it before judging significance, or you'll "learn" random variance.

2. Testing Too Many Variables

"We tested new image, new copy, new CTA, and new targeting"

Reality: You can't attribute results to any specific change, so the insights are useless for future creative.

3. Ignoring Losing Tests

"Test B lost, let's move on"

Reality: Losses contain learning. WHY did it lose? What does that tell you about your audience?

4. Creative Tunnel Vision

"Our best performer is a product image, so we only test product images"

Reality: Your best current approach isn't necessarily the best possible one. Test different concepts occasionally.

5. No Control Group

"All our creatives are 'new' so we can't have a control"

Reality: Always keep a proven control to benchmark against. Otherwise, you don't know whether new creative is actually better.

The Bottom Line

Creative testing in 2026 is systematic science, not random guessing:

> "In the privacy-first era, you can't out-target competitors. But you can out-creative them. Systematic testing is the path to creative advantage."

AdBid helps you track creative performance across platforms. See which creative drives results and when it's time to refresh. Start your creative analysis.
