A/B Testing for Advertising 2026

Master A/B testing for digital advertising. From statistical significance to AI-enhanced testing. Businesses implementing systematic testing see 25-40%...

Key Takeaways

- Businesses implementing systematic A/B testing see 25-40% ROAS improvement in first quarter

- AI-enhanced testing (such as Bing's implementation) has shown 25% revenue increases

- A/B testing market projected to reach $1.25B by 2028 (11.5% CAGR)

- Statistical significance at 95% confidence is essential for valid conclusions

- Test one variable at a time — the golden rule still applies

Why A/B Testing Matters More Than Ever

The advertising landscape has become more complex and competitive:

| Challenge | Why Testing Helps |
|---|---|
| Rising CAC | Find more efficient creative/targeting |
| Shorter attention spans | Identify what hooks fastest |
| Privacy restrictions | Understand what works despite less data |
| Platform algorithm changes | Adapt quickly to new realities |
| Creative fatigue accelerating | Know when to refresh |

Businesses implementing systematic testing protocols typically see a 25-40% improvement in ROAS within the first quarter.

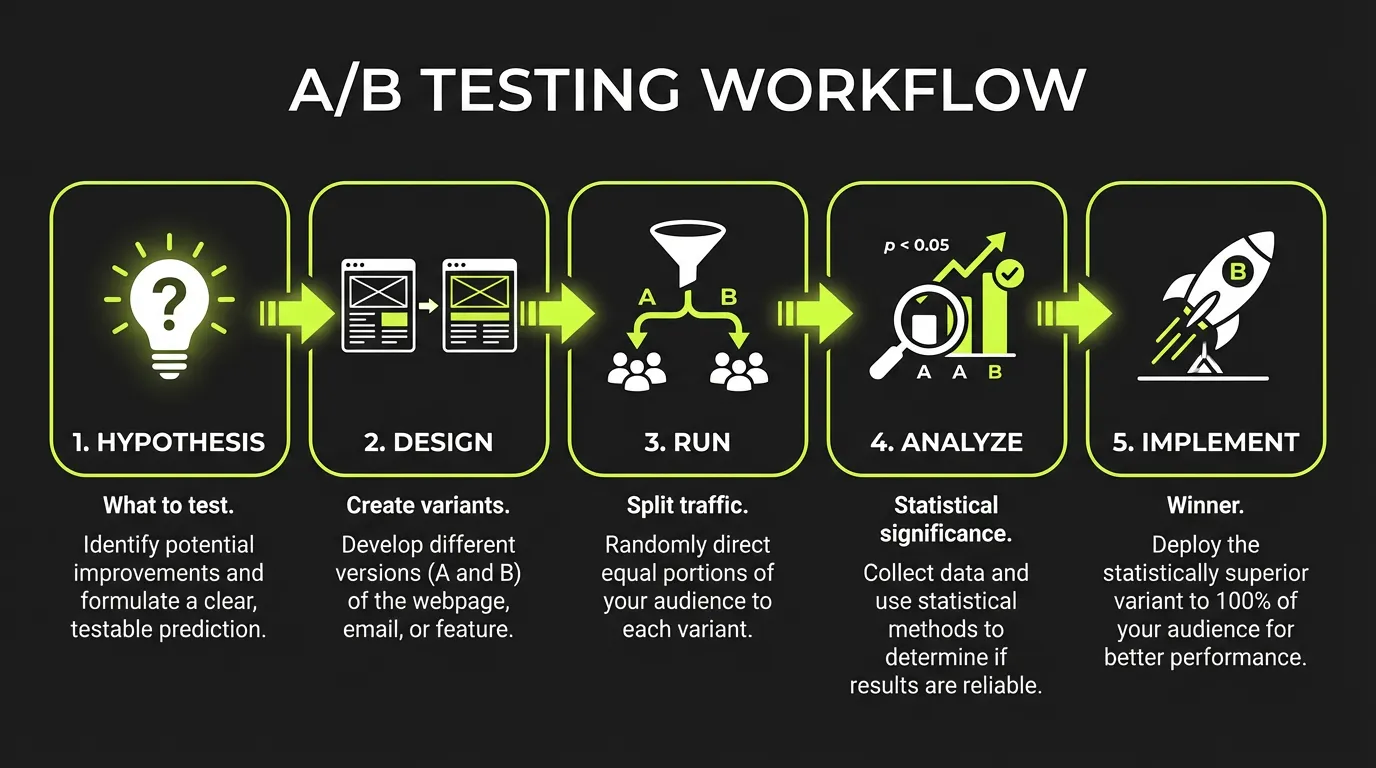

The Fundamentals of A/B Testing

What Is A/B Testing?

A/B testing (split testing) compares two versions of an element to determine which performs better:

- Version A (Control): Current approach

- Version B (Variant): Modified approach

- Metric: The outcome you're measuring

- Statistical significance: Confidence the result isn't random

The Golden Rules

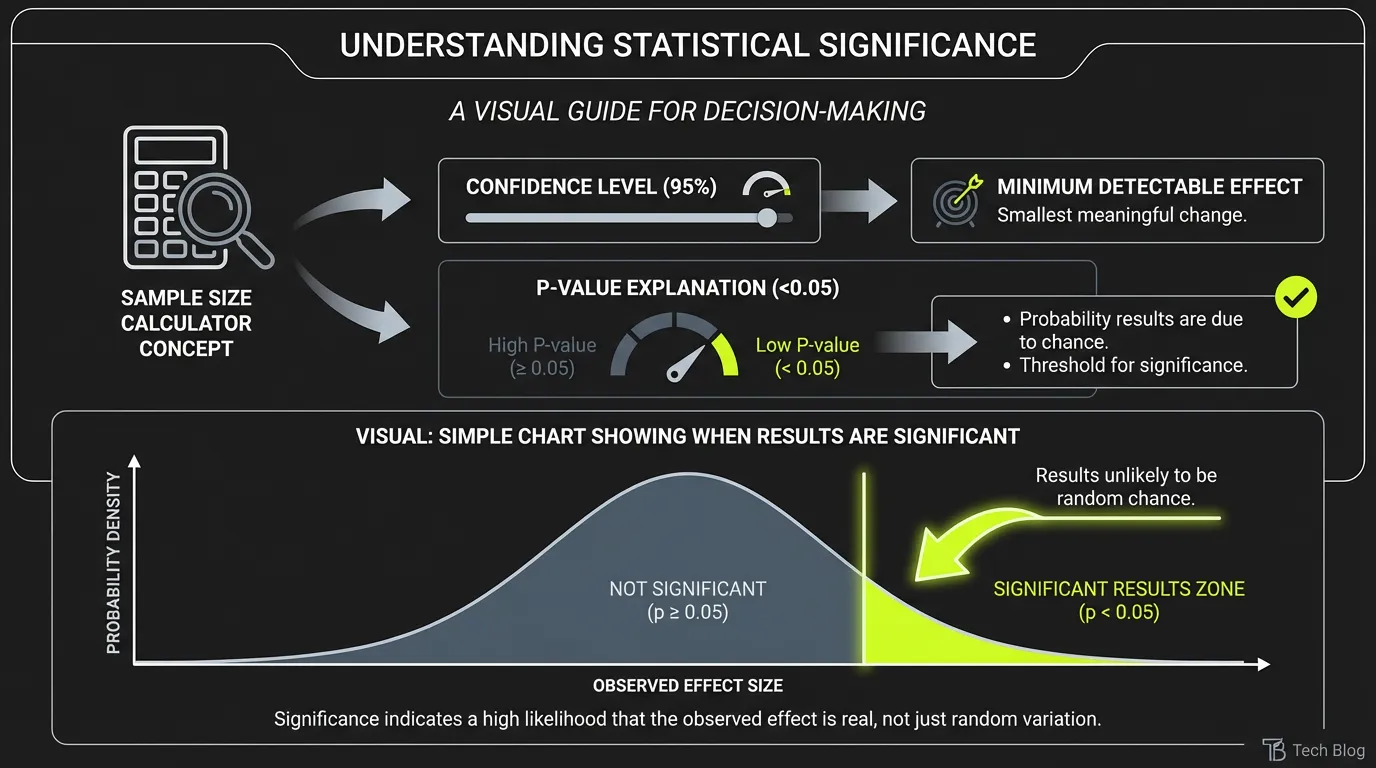

Sample Size Calculation

Before testing, determine required sample size based on:

- Current conversion rate

- Minimum detectable effect (MDE)

- Statistical power (typically 80%)

- Significance level (typically 5%, which corresponds to 95% confidence)
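The four inputs above can be combined into a rough sample size estimate. The sketch below assumes a two-sided two-proportion z-test with equal traffic per variant, and treats the MDE as a relative lift over the baseline. The function name and the example numbers are illustrative, not taken from any ad platform.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (normal approximation).

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_mde:  smallest relative lift worth detecting, e.g. 0.20 for +20%
    alpha:         significance level (0.05 corresponds to 95% confidence)
    power:         probability of detecting a true effect of size MDE
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical inputs: 3% baseline conversion rate, 20% relative MDE.
n = sample_size_per_variant(0.03, 0.20)
print(f"Visitors needed per variant: {n}")
```

With these hypothetical inputs the estimate lands in the low tens of thousands of visitors per variant. Halving the MDE roughly quadruples the requirement, which is why small expected lifts need long tests.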

What to Test in Advertising

Creative Elements

| Element | Priority | Typical Impact |
|---|---|---|
| Headlines | High | 20-50% CTR change |
| Images/video | High | 30-100% performance change |
| CTA buttons | High | 15-30% conversion change |
| Body copy | Medium | 10-20% engagement change |
| Social proof | Medium | 15-25% conversion change |
| Color schemes | Low | 5-15% CTR change |

Ad Copy Tests

Test these copy elements systematically:

Headlines:

- Benefit-focused vs. feature-focused

- Question vs. statement

- Numbers vs. no numbers

- Short vs. long

- Emotional vs. logical appeal

- Urgency vs. value proposition

- Social proof placement

- Problem-agitation-solution structure

Visual Tests

Image variations:

- People vs. no people

- Lifestyle vs. product-focused

- Single product vs. multiple

- Light vs. dark backgrounds

- Faces looking at camera vs. at product

Video variations:

- Hook style (question, statistic, statement)

- Length (15s vs. 30s vs. 60s)

- Pacing (fast cuts vs. smooth)

- CTA placement and timing

- Music vs. voiceover

Targeting Tests

Audience testing:

- Broad vs. specific targeting

- Interest-based vs. behavioral

- Lookalike percentages (1% vs. 5%)

- Custom audiences vs. prospecting

Placement testing:

- Feed vs. Stories vs. Reels

- Mobile vs. desktop

- Automatic vs. manual placements

Bid and Budget Tests

Strategy testing:

- Manual vs. automated bidding

- CPA vs. ROAS optimization

- Budget levels and scaling approaches

Platform-Specific Testing Features

Meta Ads A/B Testing

Meta's Experiments feature allows controlled tests:

- Go to Experiments in Ads Manager

- Choose A/B Test

- Select variables to test

- Set duration and success metric

- Launch and wait for significance

Google Ads Experiments

Google's campaign experiments split traffic:

- Use 50/50 traffic split

- Run for minimum 2 weeks

- Test during stable periods (avoid Black Friday)

TikTok Split Testing

TikTok Ads Manager split testing options:

- Creative A/B testing

- Targeting A/B testing

- Bidding and optimization testing

Statistical Rigor

Understanding Confidence Intervals

A 95% confidence level means that, if there were truly no difference between the variants, a result at least this extreme would occur by chance only about 5% of the time.

What confidence levels mean:

- 90% — Acceptable for directional learning

- 95% — Standard for decision-making

- 99% — Required for high-stakes changes
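One common way to check a result against these thresholds is a two-proportion z-test. The sketch below is a minimal version using only the standard library. The conversion counts are hypothetical, and the normal approximation assumes reasonably large samples.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def meets_confidence(p_value, confidence):
    """True if the p-value clears the given confidence level (e.g. 0.95)."""
    return p_value < 1 - confidence


# Hypothetical data: control converts 300/10,000, variant converts 360/10,000.
z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%} confidence reached: {meets_confidence(p, level)}")
```

With these hypothetical counts the result clears the 90% and 95% thresholds but not 99%, so it would support a routine decision but not a high-stakes change.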

Common Statistical Mistakes

:::danger Avoid These Errors

:::

Sequential Testing

For faster results with statistical validity:

- Use sequential testing methods (group sequential design)

- Pre-specify interim analysis points

- Adjust significance thresholds for multiple looks

- Tools like Optimizely handle this automatically
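The threshold adjustment for multiple looks can be illustrated with a Bonferroni split, which divides the overall significance level evenly across the pre-specified interim analyses. This is a deliberately simple and conservative stand-in. Group sequential designs normally use Pocock or O'Brien-Fleming boundaries, and this sketch does not describe how any particular tool works. The function names and p-values are hypothetical.

```python
def per_look_alpha(overall_alpha, planned_looks):
    """Bonferroni-adjusted significance threshold for each interim look."""
    if planned_looks < 1:
        raise ValueError("planned_looks must be at least 1")
    return overall_alpha / planned_looks


def first_significant_look(p_values, overall_alpha=0.05, planned_looks=4):
    """Return the 1-based look at which the test may stop, or None.

    p_values: p-values observed at each interim analysis so far, in order.
    """
    if len(p_values) > planned_looks:
        raise ValueError("more looks taken than were pre-specified")
    threshold = per_look_alpha(overall_alpha, planned_looks)
    for look, p_value in enumerate(p_values, start=1):
        if p_value < threshold:
            return look
    return None


# Hypothetical interim p-values from three of four planned looks.
print(first_significant_look([0.04, 0.03, 0.009]))
```

Note that 0.04 and 0.03 would both pass a naive 0.05 cutoff, yet neither clears the adjusted threshold of 0.0125. That gap is the protection against false positives from repeated checking.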

AI-Enhanced A/B Testing

How AI Changes Testing

The integration of AI has revolutionized optimization:

Bing reported a 25% increase in ad revenue through AI-enhanced testing methods.

AI testing capabilities:

- Automatic variant generation

- Faster significance detection

- Multi-armed bandit optimization

- Predictive performance modeling

- Automated creative iteration
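Of the capabilities listed, multi-armed bandit optimization is the easiest to show in a few lines. The sketch below uses Thompson sampling with Beta posteriors, one common bandit strategy, run against simulated traffic. The variant names and conversion rates are invented for illustration, and real platforms may use different algorithms.

```python
import random


def thompson_pick(stats, rng):
    """Choose the variant whose sampled conversion rate is highest.

    stats: dict mapping variant name -> (conversions, impressions)
    """
    best_variant, best_draw = None, -1.0
    for variant, (conversions, impressions) in stats.items():
        # Beta(1 + successes, 1 + failures) posterior from a uniform prior.
        draw = rng.betavariate(1 + conversions, 1 + impressions - conversions)
        if draw > best_draw:
            best_variant, best_draw = variant, draw
    return best_variant


def simulate(true_rates, rounds, seed=0):
    """Serve `rounds` impressions, shifting traffic toward better variants."""
    rng = random.Random(seed)
    stats = {variant: (0, 0) for variant in true_rates}
    for _ in range(rounds):
        variant = thompson_pick(stats, rng)
        conversions, impressions = stats[variant]
        converted = rng.random() < true_rates[variant]
        stats[variant] = (conversions + int(converted), impressions + 1)
    return stats


# Hypothetical true conversion rates, unknown to the algorithm.
results = simulate({"A": 0.02, "B": 0.10}, rounds=5000, seed=1)
for variant, (conversions, impressions) in results.items():
    print(variant, impressions, conversions)
```

Unlike a fixed 50/50 split, the bandit sends most impressions to the stronger variant as evidence accumulates. That reduces wasted spend, but it gives a less clean estimate of the losing variant's true rate.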

When to Use AI vs. Traditional Testing

| Use AI Testing | Use Traditional A/B |
|---|---|
| High volume, many variants | Few variants, need certainty |
| Continuous optimization | One-time decisions |
| Creative rotation | Major strategy changes |
| Performance marketing | Brand campaigns |

Building a Testing Culture

Testing Framework

Systematic approach to testing:

Phase 1: Hypothesis

- What do you believe will happen?

- Why do you believe it?

- What evidence supports this?

- One variable isolation

- Sample size calculation

- Duration planning

- Success metrics definition

- Launch A/B test

- Monitor for technical issues

- No peeking at results

- Document everything

- Check statistical significance

- Calculate confidence intervals

- Segment results (device, audience, placement)

- Document learnings

- Roll out winner (if significant)

- Plan next test based on learnings

- Update knowledge base
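The analysis steps in this framework (confidence intervals and segmented results) can be sketched as follows. The example computes a Wald confidence interval for the absolute difference in conversion rate, per segment. The segment names and counts are hypothetical, and the normal approximation assumes each segment has a reasonably large sample.

```python
import math
from statistics import NormalDist


def lift_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Confidence interval for (rate B - rate A), in absolute terms."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error of the difference between two proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical per-segment counts: (conv_a, n_a, conv_b, n_b).
segments = {
    "mobile": (180, 6000, 240, 6000),
    "desktop": (120, 4000, 120, 4000),
}

for name, counts in segments.items():
    low, high = lift_confidence_interval(*counts)
    if low > 0:
        verdict = "B ahead"
    elif high < 0:
        verdict = "A ahead"
    else:
        verdict = "inconclusive"
    print(f"{name}: [{low:+.4f}, {high:+.4f}] {verdict}")
```

An interval that excludes zero indicates a winner in that segment. Slicing a test into many segments increases the chance of a spurious winner, so segment findings are best treated as hypotheses for the next test.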

Testing Roadmap Template

| Quarter | Focus Area | Tests | Expected Impact |
|---|---|---|---|
| Q1 | Headlines | 12 tests | 15% CTR improvement |
| Q2 | Creative format | 8 tests | 20% engagement lift |
| Q3 | Audience targeting | 6 tests | 10% ROAS improvement |
| Q4 | Landing pages | 10 tests | 25% CVR improvement |

Measuring Success

Test Analysis Checklist

✅ Statistical significance reached (95%+ confidence)

✅ Adequate sample size achieved

✅ Test ran long enough (7+ days minimum)

✅ No external factors contaminating results

✅ Results consistent across segments

✅ Practical significance (not just statistical)

What to Do With Results

When test wins:

- Implement at scale

- Document the learning

- Plan iteration tests

- Share with team

When test loses:

- Understand why

- Document the learning

- Try different approach

- Don't give up on hypothesis entirely

When test is inconclusive:

- Need more traffic/time

- Variable may not matter much

- Move to higher-impact tests

The Bottom Line

Effective A/B testing in 2026 requires:

The gap between guessing and knowing is your competitive advantage.

